AI watermarking is reshaping copyright law enforcement by embedding invisible markers into digital content. These markers help verify ownership, trace content origins, and distinguish human-created works from AI-generated ones. As of January 2026, the U.S. Copyright Office and global regulators are addressing how this technology fits into legal frameworks. Here’s what you need to know:

- What It Does: AI watermarking embeds imperceptible, machine-readable patterns into digital media, enabling automated tracking and ownership verification.

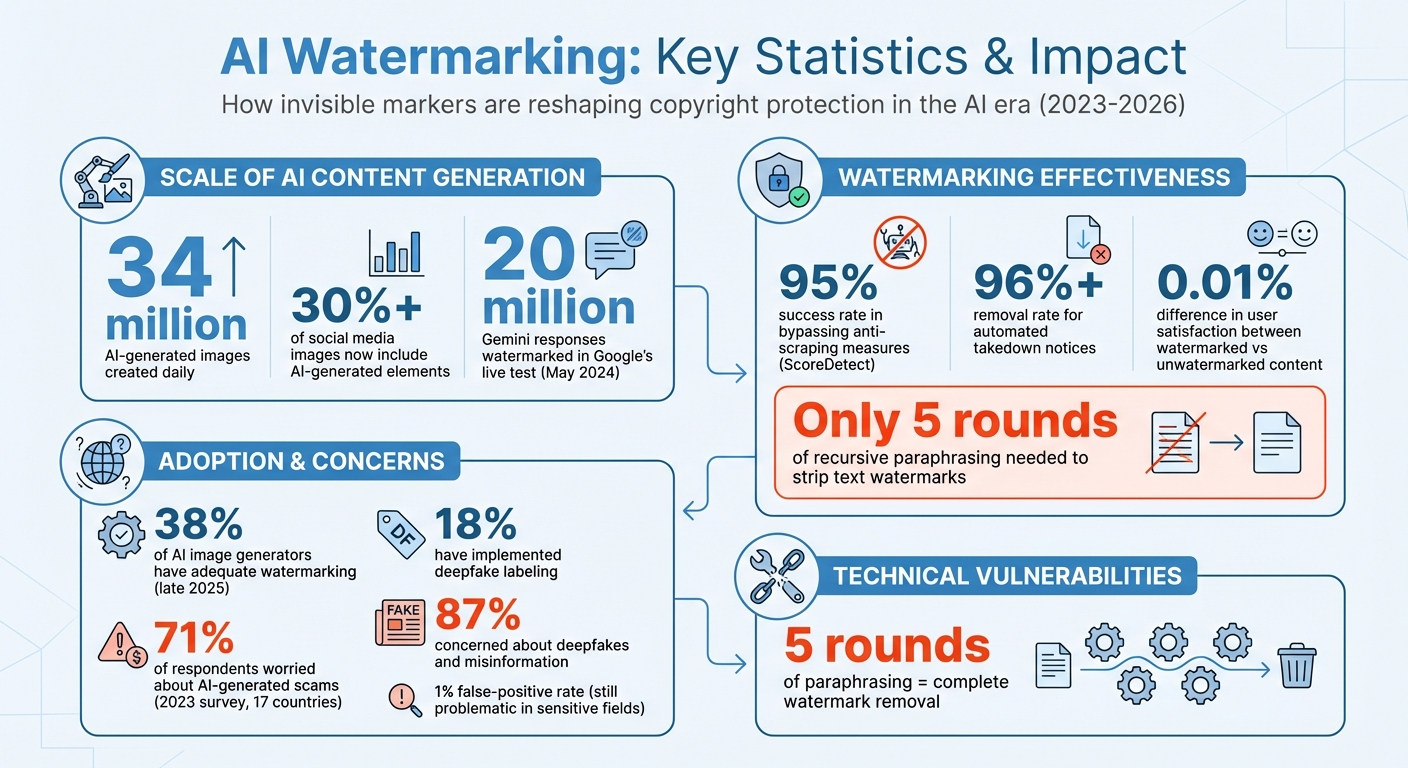

- Why It Matters: With AI-generated content surging (e.g., 34M daily images on DALL-E 2), watermarking helps combat misuse, protect creators’ rights, and authenticate content in legal disputes.

- Challenges: Watermarks can be fragile – simple edits like paraphrasing or cropping may erase them. Proprietary tools and inconsistent global standards complicate enforcement.

- Legal Use: Courts are beginning to accept watermarked content as evidence, provided creators document its use and maintain clear records.

- Future Trends: Universal detection standards and stronger global regulations will likely drive broader adoption.

AI watermarking offers a promising solution for copyright protection but comes with technical and legal hurdles. Businesses and creators must stay informed to safeguard their work effectively.

AI Watermarking Statistics: Adoption Rates, Detection Success, and Market Impact 2023-2026



How AI Watermarking Works with Copyright Laws

AI watermarking is a game-changer for copyright law enforcement. By embedding invisible markers into digital content, this technology equips creators with tools that align with existing legal frameworks while tackling modern challenges. In January 2025, the U.S. Copyright Office confirmed that current copyright principles are flexible enough to cover AI-generated content without requiring legislative changes [7]. This clarification highlights watermarking’s legal importance. Let’s explore how this technology supports ownership claims and improves enforcement.

Proof of Ownership and Authenticity

Watermarking embeds an invisible marker within digital content, linking it to its human creator [2][3]. This is crucial because U.S. copyright law only protects works where a human author provides "sufficient expressive elements." Watermarking makes it easier to differentiate human-led creations from entirely machine-generated outputs [7]. Shira Perlmutter, Register of Copyrights and Director of the U.S. Copyright Office, elaborated on this:

"Our conclusions turn on the centrality of human creativity to copyright. Where that creativity is expressed through the use of AI systems, it continues to enjoy protection" [7].

Provenance standards like C2PA add another layer of reliability by cryptographically binding metadata to media files. This creates a verifiable record of who created the content and tracks any edits made later [1]. China, for example, has implemented mandatory watermarking for generative AI content to ensure transparency and traceability [1]. For companies managing large-scale digital assets, platforms like ScoreDetect combine watermarking with blockchain to produce legally defensible proof of ownership. This technology strengthens copyright enforcement by providing concrete evidence.

Improving Copyright Enforcement

Watermarking shifts copyright enforcement from a reactive process to a proactive one. By embedding identification data during content creation, watermarking enables automated detection – in many cases even after the content has been altered [4]. This is especially relevant as more than 30% of social media images now include AI-generated elements [4].

In May 2024, Google DeepMind showcased the effectiveness of watermarking with its SynthID-Text system. During a live test involving nearly 20 million Gemini responses, watermarking maintained high detection accuracy without affecting content quality. User satisfaction ratings between watermarked and unwatermarked content differed by a mere 0.01% [3]. Following this success, Google integrated SynthID-Text into its Gemini platforms, making large-scale tracking of AI-generated text a reality.

ScoreDetect’s Enterprise plan takes this a step further by combining watermarking with intelligent web scraping. This approach achieves a 95% success rate in bypassing anti-scraping measures and automates takedown notices, which see over 96% removal rates. Given the staggering 34 million AI-generated images created daily [4], this technology handles a scale that manual monitoring simply can’t.

Legal Recognition of Watermarked Content

Courts are starting to accept watermarked content as evidence, but with some limitations. The U.S. Copyright Office’s January 2025 report clarified that AI-assisted works are copyrightable if a human author contributes creative modifications, but not if they merely provide prompts [7]. Watermarking helps establish this distinction by offering measurable proof of content origin.

However, legal experts warn that watermarks should serve as "a flag of suspicion" rather than conclusive evidence [5]. Chad Heitzenrater, Senior Information Scientist at RAND Corporation, explained:

"The presence of a mark is less a definitive indicator of AI-generated content, and more a flag of suspicion" [5].

There are technical challenges as well. Tactics like paraphrasing text, cropping images, or converting file formats can weaken watermark signals [2]. Additionally, most watermarking tools are proprietary – Google’s watermarking, for example, can usually only be verified using Google’s detection systems [2]. To address this, creators must document their use of watermarking tools and retain access to the relevant detection algorithms.

Despite these hurdles, regulatory developments are paving the way for broader acceptance of watermarked evidence. In July 2023, seven major AI companies, including OpenAI, Alphabet, and Meta, pledged to the White House to develop robust watermarking methods. This commitment led to an Executive Order on AI, directing the Department of Commerce to establish standards for labeling AI-generated material [2]. As these frameworks evolve, courts are likely to rely more on watermarked content – provided creators can demonstrate proper implementation and maintain a clear chain of custody.
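One way to picture the documentation and chain-of-custody requirement is an append-only log in which every entry commits to the one before it, so later tampering becomes detectable. The following is a minimal sketch under stated assumptions, not a legally vetted evidence format: the `CustodyLog` class, its field names, and the tool label are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class CustodyLog:
    """Append-only log; each entry stores the hash of the previous entry."""
    entries: list = field(default_factory=list)

    def append(self, content: bytes, tool: str, timestamp: str) -> dict:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else "0" * 64
        body = {
            "content_sha256": sha256_hex(content),  # only a checksum, not the asset
            "tool": tool,                           # hypothetical tool label
            "timestamp": timestamp,
            "prev_hash": prev_hash,
        }
        # Hash a canonical JSON encoding so verification is reproducible.
        encoded = json.dumps(body, sort_keys=True).encode("utf-8")
        entry = dict(body, entry_hash=sha256_hex(encoded))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that the chain links are intact."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            encoded = json.dumps(body, sort_keys=True).encode("utf-8")
            if entry["prev_hash"] != prev_hash or entry["entry_hash"] != sha256_hex(encoded):
                return False
            prev_hash = entry["entry_hash"]
        return True


log = CustodyLog()
log.append(b"original artwork bytes", tool="example-watermarker 1.0", timestamp="2025-01-15T10:00:00Z")
log.append(b"edited artwork bytes", tool="example-watermarker 1.0", timestamp="2025-01-16T09:30:00Z")
print(log.verify())  # True for an untouched log
```

Editing any earlier entry changes its hash and breaks every later link, which is the property that makes such a record useful when a creator has to show how and when a watermark was applied.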

Challenges of AI Watermarking in Copyright Protection

AI watermarking has emerged as a promising tool for copyright enforcement, but it’s far from a perfect solution. Its effectiveness is hindered by technical weaknesses, varying international laws, and ethical dilemmas. Understanding these obstacles is essential for creators looking to implement watermarking strategies effectively.

Technical Vulnerabilities

Despite their potential, watermarks are surprisingly fragile. For text-based content, techniques like recursive paraphrasing can erase watermarks completely. Research indicates that just five rounds of recursive paraphrasing can strip a watermark entirely [1].
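To see why paraphrasing is so damaging, it helps to look at how a statistical text watermark is detected. The sketch below uses a generic keyed "green list" scheme as a stand-in; it is not SynthID-Text's method, and the toy vocabulary, the key, and the 50% replacement rate per round are assumptions chosen only for illustration.

```python
import hashlib
import math
import random

VOCAB = [f"w{i}" for i in range(50)]  # hypothetical toy vocabulary


def is_green(prev_token, token, key="demo-key"):
    """Keyed pseudorandom split: about half of all (prev, token) pairs count as green."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode("utf-8")).digest()
    return digest[0] % 2 == 0


def generate_watermarked(length, rng, key="demo-key"):
    """Toy generator that only picks green tokens (a real model would merely favor them)."""
    tokens = [rng.choice(VOCAB)]
    while len(tokens) < length:
        green = [t for t in VOCAB if is_green(tokens[-1], t, key)] or VOCAB
        tokens.append(rng.choice(green))
    return tokens


def z_score(tokens, key="demo-key"):
    """Standard deviations by which the green count exceeds the 50% expected by chance."""
    pairs = list(zip(tokens, tokens[1:]))
    green = sum(is_green(prev, tok, key) for prev, tok in pairs)
    return (green - 0.5 * len(pairs)) / math.sqrt(0.25 * len(pairs))


def paraphrase(tokens, rng, replace_prob=0.5):
    """Crude stand-in for paraphrasing: swap each token for a random one with probability replace_prob."""
    return [rng.choice(VOCAB) if rng.random() < replace_prob else t for t in tokens]


rng = random.Random(0)
text = generate_watermarked(201, rng)
scores = [z_score(text)]
for _ in range(5):  # five simulated paraphrasing rounds
    text = paraphrase(text, rng)
    scores.append(z_score(text))

print([round(s, 1) for s in scores])  # starts high, then decays toward chance level
```

Each round replaces more of the token pairs the detector relies on, so the score drifts back toward what unwatermarked text would produce.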

The situation isn’t much better for images and videos. Simple edits like JPEG compression, cropping, rotation, or adding noise can degrade or destroy watermark signals [2]. Attackers can even use adversarial training by repeatedly querying APIs to figure out how to remove watermarks without damaging the visual quality of the content [1].
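The fragility described above can be illustrated with a deliberately naive least-significant-bit watermark. This is a toy sketch, not how production image watermarks work: pixels are modeled as a plain list of 8-bit values, and coarse quantization is an assumed stand-in for lossy compression.

```python
import random


def embed_lsb(pixels, bits):
    """Write one watermark bit into the least significant bit of each pixel."""
    return [(p & ~1) | b for p, b in zip(pixels, bits)]


def extract_lsb(pixels):
    """Read the least significant bit of each pixel."""
    return [p & 1 for p in pixels]


def quantize(pixels, step=4):
    """Crude stand-in for lossy compression: round each pixel down to a multiple of step."""
    return [(p // step) * step for p in pixels]


def bit_match_rate(a, b):
    """Fraction of positions where the two bit sequences agree."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


rng = random.Random(0)
pixels = [rng.randrange(256) for _ in range(1000)]
mark = [rng.randrange(2) for _ in range(1000)]

marked = embed_lsb(pixels, mark)
clean_rate = bit_match_rate(extract_lsb(marked), mark)
degraded_rate = bit_match_rate(extract_lsb(quantize(marked)), mark)

print(clean_rate)     # 1.0: the untouched copy carries the full mark
print(degraded_rate)  # close to 0.5: no better than guessing
```

The mark changes each pixel by at most one intensity level, so it is invisible, but the same property means any edit that disturbs the lowest bits wipes it out. More robust schemes spread the signal across many pixels or frequencies to survive such edits.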

Open-source models add another layer of complexity. Users can simply remove the watermarking code before generating content [1]. Additionally, watermarking struggles in low-entropy scenarios – situations with limited variability, like generating code, mathematical solutions, or historical documents – because there’s not enough randomness to embed a strong statistical watermark [2]. As Siddarth Srinivasan, a Postdoctoral Fellow at Harvard University, points out:

"It takes some technical sophistication to circumvent AI detection tools and enabling the detection of a major chunk of AI-generated content can still be worthwhile" [2].

False positives are another significant issue. A 1% false-positive rate might seem low, but in sensitive fields, it’s often unacceptable [2]. Platforms like ScoreDetect attempt to address these challenges by combining watermarking with blockchain verification and web scraping, creating multiple layers of protection. However, these technical hurdles are compounded by inconsistent international standards, making cross-border enforcement even more challenging.
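A short calculation shows why a 1% false-positive rate matters at scale. The figures below are assumptions for illustration only: a 95% detection rate and the two AI-content shares are hypothetical, and only the 1% false-positive rate comes from the text above.

```python
def false_flags(human_items: int, false_positive_rate: float) -> float:
    """Expected number of human-made items wrongly flagged as AI-generated."""
    return human_items * false_positive_rate


def precision(ai_share: float, true_positive_rate: float, false_positive_rate: float) -> float:
    """Probability that a flagged item really is AI-generated (Bayes' rule)."""
    true_pos = ai_share * true_positive_rate
    false_pos = (1 - ai_share) * false_positive_rate
    return true_pos / (true_pos + false_pos)


# One million human-made items at a 1% false-positive rate: about 10,000 wrongly flagged.
print(false_flags(1_000_000, 0.01))

# If 30% of items are AI-generated, a flag is right about 97.6% of the time.
print(round(precision(0.30, 0.95, 0.01), 3))

# If only 1% of items are AI-generated, a flag is right only about 49% of the time.
print(round(precision(0.01, 0.95, 0.01), 3))
```

The same detector becomes far less trustworthy when AI-generated content is rare in the pool being checked, which is why a flag alone is weak evidence in settings such as academic integrity or legal disputes.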

Differences in Copyright Laws Across Countries

The lack of uniformity in international copyright laws creates significant challenges for businesses operating globally. For example, China has already mandated watermarking for generative AI content, and the European Union’s AI Act requires disclosure and labeling of AI-generated content in specific contexts [1][2]. In contrast, the United States has largely left watermarking to the discretion of major AI companies.

This fragmented legal landscape makes enforcement costly and complex. While digital content flows freely across borders, checking for watermarks becomes a piecemeal process, as content must be evaluated against varying detection standards [2]. By December 2023, the U.S. Copyright Office had received numerous public comments on AI and copyright law, highlighting the unsettled nature of these issues [6]. Proposed U.S. regulations may also face legal challenges, particularly regarding First Amendment protections [1].

Without global standards, businesses must navigate a maze of requirements. Adopting open technical standards like C2PA or IPTC metadata can increase the chances of content being recognized by international platforms and legal systems [1].

Ethical Concerns and Misuse

Ethical issues further complicate the reliability of watermarking. Bad actors might misuse watermarks to falsely label human-created content as AI-generated or to claim ownership of work they didn’t create [2][5]. A related risk, known as the "liar’s dividend," is that individuals can dismiss authentic, incriminating content as AI-generated, thereby avoiding accountability [2].

Chad Heitzenrater, Senior Information Scientist at RAND Corporation, cautions:

"The watermarking algorithm itself can become a vehicle for novel and powerful attacks against the information ecosystem" [5].

Privacy concerns are another pressing issue. Watermarks could embed identifying information about the user who generated the content, increasing the risk of surveillance [2]. Moreover, detection tools may struggle with accuracy, sometimes mislabeling human content or disproportionately affecting certain groups, like non-native speakers [3]. A 2023 survey across 17 countries revealed that 71% of respondents were worried about AI-generated scams, including impersonations of public figures or friends [4]. When detection systems fail – whether by mislabeling or missing AI-generated content – they erode public trust in the broader information ecosystem [5].

To address these concerns, businesses using watermarking must prioritize transparency. They should clearly disclose its use and whether it involves any user-identifying data [2]. Privacy-by-design principles can help ensure that third-party detection services handle data securely. For example, ScoreDetect leverages blockchain to create a checksum of content without storing the actual digital assets, offering verifiable proof of ownership while safeguarding privacy.

Business Benefits of AI Watermarking for Copyright Protection

AI watermarking isn’t just about enforcing copyright – it’s a powerful tool for safeguarding revenue and improving operational workflows. By embedding traceable signatures into digital content, businesses can protect their assets, streamline enforcement processes, and establish clear ownership records to strengthen their legal claims.

Protecting Revenue Streams

Watermarking acts as a frontline defense against revenue loss. By embedding traceable signatures at the point of creation, businesses can track the source of leaked or pirated files through forensic analysis. This is especially critical in industries like audio and video production, where Digital Rights Management (DRM) plays a key role [1].

Take Resemble AI, for example. In 2023, they introduced a machine-learning watermarking system specifically for synthetic speech. This innovation allows platforms to decode and identify content generated by specific models, addressing concerns like voice-based identity fraud and unauthorized use of synthetic voices [1]. Similarly, Google’s SynthID-Text has shown that large-scale detection of watermarked content can be done efficiently, allowing businesses to monitor millions of outputs for copyright compliance without compromising performance [3].

Automating Content Monitoring and Takedowns

Enforcing copyright manually can be both costly and time-consuming. Watermarking simplifies this process by making content machine-detectable, enabling automated systems to identify and remove unauthorized material [1]. Unlike visible watermarks, which can be easily cropped out or erased, invisible watermarks are designed to persist through distribution, which makes verification of content origin more reliable.

Platforms like ScoreDetect take this a step further by combining invisible watermarking with intelligent web scraping tools. With a 95% success rate in bypassing anti-scraping measures, ScoreDetect automates the discovery of infringing content. Once detected, its analysis tools provide quantitative proof of unauthorized usage, and automated delisting notices achieve a takedown rate of over 96%. Additionally, ScoreDetect integrates with over 6,000 web apps via Zapier, triggering coordinated actions when infringements are identified. For WordPress users, the platform’s plugin automatically captures published or updated articles, creating verifiable ownership proof while also boosting SEO through enhanced Google E-E-A-T signals.

Adding Trust with Blockchain Technology

Blockchain can add another layer of credibility by creating an immutable audit trail for ownership claims. Provenance standards like C2PA (Coalition for Content Provenance and Authenticity) pursue a similar goal by cryptographically binding metadata to media, establishing a verifiable record of its creator and origin [1]. Tim Bernard from Unitary explains:

"C2PA is a means to cryptographically bind a metadata manifest to a piece of media (image, audio, video) to establish who created it and any additional information" [1].

ScoreDetect also incorporates blockchain by capturing checksums of content without storing the actual digital assets. This approach addresses privacy concerns while still providing verifiable proof of ownership.
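The idea of binding a manifest to media through a checksum, without storing the asset itself, can be sketched in a few lines. This is an illustration of the general principle only: the real C2PA standard defines its own manifest format and relies on certificate-based signatures, whereas this sketch substitutes an HMAC from Python's standard library, and the field names and key are hypothetical.

```python
import hashlib
import hmac
import json


def make_manifest(content: bytes, creator: str, secret_key: bytes) -> dict:
    """Build a manifest that commits to the content hash, then sign the manifest."""
    claims = {"creator": creator, "content_sha256": hashlib.sha256(content).hexdigest()}
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    signature = hmac.new(secret_key, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "signature": signature}


def verify_manifest(content: bytes, manifest: dict, secret_key: bytes) -> bool:
    """Check that the signature is valid and the content still matches the recorded hash."""
    payload = json.dumps(manifest["claims"], sort_keys=True).encode("utf-8")
    expected = hmac.new(secret_key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    content_ok = manifest["claims"]["content_sha256"] == hashlib.sha256(content).hexdigest()
    return signature_ok and content_ok


key = b"demo-signing-key"  # hypothetical key for illustration only
image = b"example image bytes"
manifest = make_manifest(image, "Example Studio", key)

print(verify_manifest(image, manifest, key))              # True
print(verify_manifest(image + b"edited", manifest, key))  # False
```

Because the manifest holds only a hash, it can be published or anchored in a ledger without exposing the underlying file, while any change to either the file or the claims causes verification to fail.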

The benefits extend beyond legal protection. A 2023 Microsoft poll across 17 countries revealed that 71% of respondents were concerned about scams involving AI, and 87% worried about risks like deepfakes or misinformation [4]. By offering cryptographically verifiable ownership records, businesses can clearly distinguish authentic content from fakes, building trust with customers and partners in an increasingly skeptical digital environment. These advancements pave the way for stronger copyright protection as the technology continues to evolve.

The Future of AI Watermarking in Copyright Protection

Key Takeaways

AI watermarking has evolved into a game-changing tool, embedding traceable signatures directly at the point of content creation [8]. These invisible watermarks are now reshaping how ownership is verified. They offer businesses three major benefits: clear proof of ownership backed by blockchain verification, cost savings through automated enforcement that minimizes manual monitoring, and protection of revenue by tracing the source of leaked content using forensic analysis.

Google has integrated SynthID-Text into its Gemini systems, following a live test that watermarked around 20 million responses using Tournament sampling. This implementation introduced only minimal latency and had no measurable impact on user feedback rates, demonstrating that watermarking can scale without compromising the user experience [3]. Despite these advancements, challenges persist. As of late 2025, only 38% of AI image generators had adopted adequate watermarking practices, and just 18% had implemented deepfake labeling [9]. These gaps highlight the need for stronger enforcement standards moving forward.

What Comes Next

The future of copyright protection hinges on the development of universal detection standards. Current detection systems are largely proprietary, making it difficult to efficiently monitor content at scale [2]. Siddarth Srinivasan, a Postdoctoral Fellow at Harvard University, emphasizes:

"A realistic objective is to raise the barrier to evading watermarks so the majority of AI-generated content can be identified" [2].

Regulatory frameworks are already pushing this evolution forward. The 2024 EU AI Act, for instance, requires labeling of AI-generated content in specific contexts, turning disclosure into a legal obligation rather than a voluntary feature [9]. Future advancements in watermarking technology will focus on maintaining content quality while ensuring detectability. Cryptographic standards like C2PA, which securely bind metadata to media, are gaining prominence [1][3].

As businesses continue to benefit from automated monitoring, the next step is to establish universal detection standards. By combining invisible watermarking with blockchain verification, companies can build stronger, multi-layered defenses against copyright infringement.

FAQs

How does AI watermarking help protect digital content from copyright infringement?

AI watermarking protects digital content by embedding hidden, machine-readable markers directly into files. These markers allow creators to verify ownership and track unauthorized use, often even after the content has been edited or modified, although heavy edits can weaken or remove them.

With this technology, creators and businesses can better identify and address copyright violations, safeguarding their intellectual property while preserving the original content’s quality.

What challenges come with using AI watermarking for copyright protection?

AI watermarking comes with its fair share of challenges, especially when used for copyright protection. One of the biggest hurdles is finding the right balance between robustness, fidelity, and capacity. Robustness refers to the watermark’s ability to withstand edits like compression or resizing. Fidelity focuses on ensuring the watermark doesn’t noticeably alter the original content. Capacity, on the other hand, determines how much data the watermark can carry. Achieving harmony between these factors is no small feat and requires technical precision.
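The tension between capacity and robustness can be made concrete with a simple repetition code. The sketch below is an assumption-laden toy, not a real watermarking scheme: it fixes a budget of 9,000 carrier bits, repeats each payload bit a chosen number of times, simulates an edit that flips 20% of the carrier bits, and decodes by majority vote. Fidelity is not modeled here.

```python
import random


def encode(bits, repeat):
    """Repeat every payload bit `repeat` times."""
    return [b for b in bits for _ in range(repeat)]


def decode(carrier, repeat):
    """Recover each payload bit by majority vote over its `repeat` copies."""
    groups = [carrier[i:i + repeat] for i in range(0, len(carrier), repeat)]
    return [1 if sum(g) * 2 > len(g) else 0 for g in groups]


def flip_noise(carrier, flip_prob, rng):
    """Simulate an edit that flips each carrier bit independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in carrier]


def error_rate(slots, repeat, flip_prob, seed=0):
    """Fraction of payload bits decoded incorrectly for a given repetition factor."""
    rng = random.Random(seed)
    payload = [rng.randrange(2) for _ in range(slots // repeat)]
    received = decode(flip_noise(encode(payload, repeat), flip_prob, rng), repeat)
    return sum(p != r for p, r in zip(payload, received)) / len(payload)


for repeat in (1, 9):
    capacity = 9000 // repeat  # payload bits that fit in the fixed carrier budget
    print(repeat, capacity, error_rate(9000, repeat, flip_prob=0.2))
```

With no repetition the payload is large but roughly one bit in five is corrupted; with nine copies per bit the payload shrinks to a ninth of the size while the error rate drops to a few percent. Real systems face the same trade, with the added constraint that stronger embedding tends to be more visible or audible.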

Another obstacle lies in tailoring watermarks to different types of media – whether it’s images, audio, video, or text. The watermark needs to remain detectable even after modifications, which adds another layer of complexity. On top of that, developing algorithms that are scalable, efficient, and capable of operating in real time without sacrificing quality or speed is a significant technical challenge.

As AI models and content manipulation techniques grow more sophisticated, watermarking solutions must evolve to keep pace with new methods of tampering. This constant need for innovation, paired with ensuring compliance with legal and regulatory standards, makes implementing AI watermarking a tough but essential task in protecting copyrighted material.

How is AI watermarking treated under copyright laws in different countries?

AI watermarking is viewed differently across the globe, with the United States primarily addressing it through existing copyright laws. In the U.S., copyright protection generally applies when a human author has added creative input to a work, even if AI was part of the process. On the other hand, works created entirely by AI, without any human contribution, are typically not eligible for copyright protection.

As for AI watermarking itself, its legal standing remains a work in progress. In the U.S., watermarking is being explored as a way to verify AI-generated content and establish authorship. However, its recognition largely hinges on whether it can be considered a valid digital signature under copyright law. Internationally, the approach varies: China mandates watermarking for generative AI content and the EU AI Act requires labeling in specific contexts, but there’s no universal framework in place. As technology and policies evolve, AI watermarking could become an increasingly important tool for safeguarding digital content.