Audio watermarking embeds hidden data into sound files to protect intellectual property. However, desynchronization attacks – like time-scale modification, cropping, and resampling – can distort the audio, making the watermark undetectable without removing it. This creates major challenges for copyright protection, especially in "blind" watermarking systems that don’t rely on original audio for recovery.

Key points:

- Desynchronization attacks disrupt timing or frequency alignment, confusing detection systems.

- Common methods include time-scale modification (±50%), cropping, and pitch shifts.

- Blind watermarking systems, used in 90% of cases, are particularly vulnerable.

- Advanced attacks, like neural vocoder resynthesis, can strip watermarks entirely, though AI improves audio watermarking accuracy in many other scenarios.

Recent research highlights:

- No current watermarking system resists all attack types.

- Neural vocoder resynthesis caused up to 50% Bit Error Rate (BER) in leading systems like WavMark.

- Solutions like AWARE and SyncGuard show promise by reducing BER and addressing synchronization issues.

The article explores these challenges and emerging solutions, emphasizing the need for stronger protections against evolving attack methods.

UOC’s Audio Watermarking System, High-fidelity recovery under extreme conditions

sbb-itb-738ac1e

Types of Desynchronization Attacks

Attackers typically rely on three main approaches to disrupt audio watermarking systems: time-domain attacks, frequency-domain attacks, and hybrid attacks. Each method targets a different aspect of the audio signal, causing watermark detection systems to fail despite the embedded data technically remaining intact. Let’s dive into how each type works and their specific impacts.

Time-domain attacks focus on altering the timing structure of audio signals. Techniques like Time-Scale Modification (TSM) adjust the audio’s duration without affecting its pitch, similar to changing playback speeds. Resampling modifies the sampling rate, leading to temporal shifts that misalign the watermark. Other methods, such as cropping and jitter attacks, tamper with audio segments or sample counts. For example, a study published in March 2020 by Wenhuan Lu and Ling Li in IEEE Access demonstrated that their Robust Feature Points Scheme (RFPS) achieved a 0% bit error rate in extracting watermarks even under non-uniform cropping attacks [1]. These attacks exploit the reliance of detection systems on precise timing references, effectively disrupting the synchronization needed to extract the watermark.

On the other hand, frequency-domain attacks manipulate the spectral characteristics of the audio. Pitch shifting changes the pitch while preserving the tempo, shifting the watermark signal out of its expected frequency range. Spectral filtering, using techniques like low-pass, high-pass, or notch filters, removes specific frequency bands where psychoacoustic audio watermarks are embedded. A study led by Kosta Pavlović at deepmark.me in October 2025 revealed the vulnerability of watermarking systems to such attacks. Under a 55-cent pitch shift, the WavMark system experienced a 50.00% Bit Error Rate (BER), while band-stop filtering caused AudioSeal’s BER to rise to 33.81% [6]. As Pavlović explained:

Reliably detecting synchronization markers is nearly as difficult as extracting the watermark itself [6].

This highlights how frequency-domain modifications can severely hinder watermark detection, making it a complex challenge for existing systems.

Lastly, hybrid attacks combine multiple distortion techniques, creating the most formidable challenge for watermarking systems. For instance, Resample TSM alters both the duration and pitch simultaneously, while splicing rearranges segments from various sources. Neural vocoder resynthesis, using AI systems like BigVGAN, processes audio through resynthesis pipelines that effectively strip traditional watermarks. Research has tested watermarking schemes against scaling factors of up to ±50% for both TSM and pitch-invariant modifications [2]. In August 2025, Zhenliang Gan’s team introduced SyncGuard at the ECAI conference, a system that uses frame-wise broadcast embedding to extract watermarks from arbitrary-length segments without requiring precise localization [3].

The strength of hybrid attacks lies in their ability to disrupt synchronization across multiple dimensions at once. While single-domain attacks might leave some detection pathways intact, hybrid techniques systematically eliminate the reference points needed for extraction algorithms, making watermark recovery nearly impossible without advanced countermeasures. These defenses are critical components of broader digital piracy solutions designed to maintain content integrity.

Research on Audio Watermarking Vulnerabilities

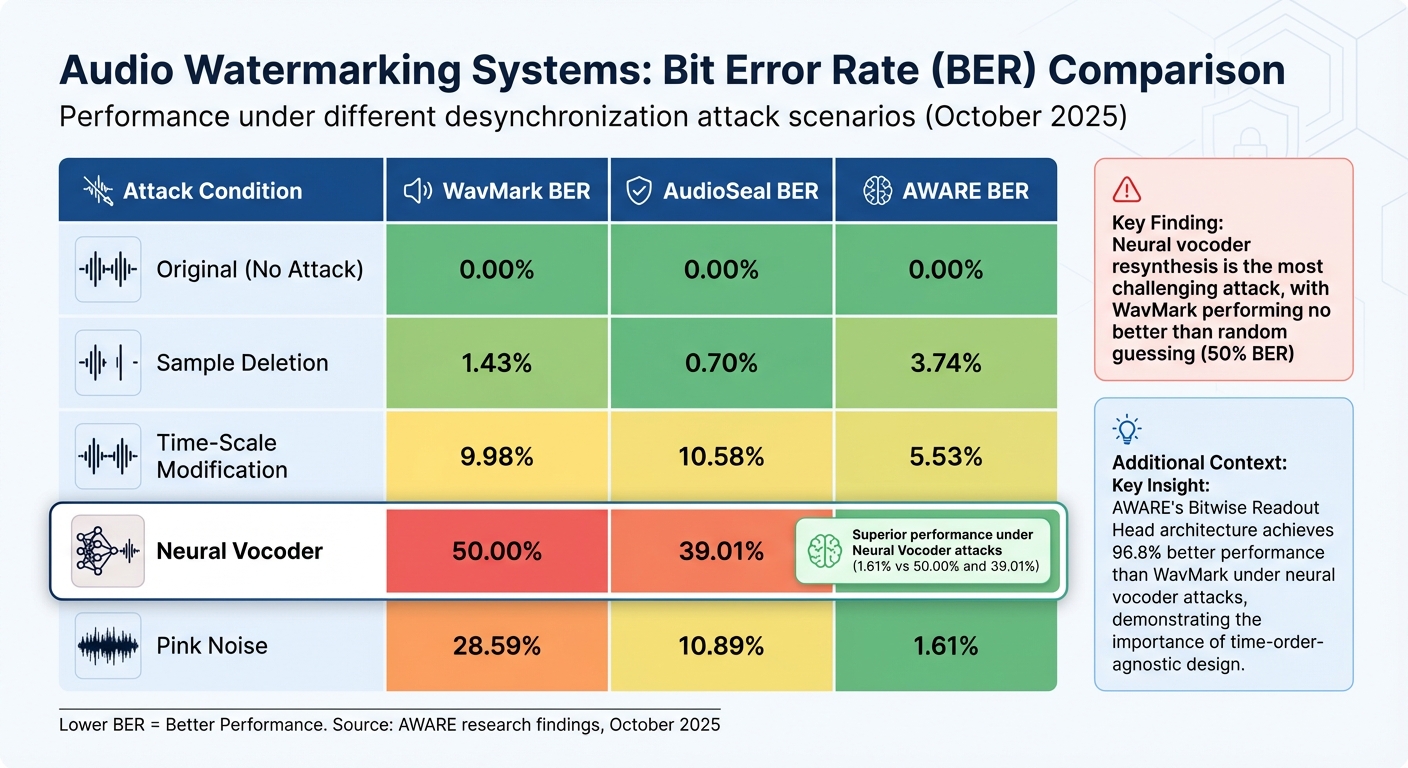

Audio Watermarking Systems Performance Under Desynchronization Attacks

Testing Results from Watermarking Schemes

Recent large-scale tests have brought to light the weaknesses in current audio watermarking technologies. In 2025, researchers evaluated 22 watermarking schemes against 109 different attack configurations, ranging from basic signal processing techniques to distortions powered by AI. The outcome? None of the existing technologies could withstand all practical distortions [7]. Yizhu Wen and colleagues put it plainly:

None of the surveyed watermarking schemes is robust enough to withstand all tested distortions in practice [7].

The numbers speak volumes. For example, when Kosta Pavlović’s team assessed leading systems in October 2025, WavMark’s Bit Error Rate (BER) skyrocketed to 50.00% under neural vocoder resynthesis, rendering its performance no better than random guessing. Meanwhile, AudioSeal recorded a BER of 39.01% under the same conditions. Adding to these concerns, research presented at the AAAI 2026 conference revealed that overwriting attacks could achieve nearly 100% success rates against the most advanced neural watermarking methods. These attacks allowed adversaries to replace legitimate watermarks with forged ones [4].

| Attack Condition | WavMark BER (%) | AudioSeal BER (%) | AWARE BER (%) |

|---|---|---|---|

| Original (No Attack) | 0.00 | 0.00 | 0.00 |

| Sample Deletion | 1.43 | 0.70 | 3.74 |

| Time-Scale Modification | 9.98 | 10.58 | 5.53 |

| Neural Vocoder | 50.00 | 39.01 | 1.61 |

| Pink Noise | 28.59 | 10.89 | 1.61 |

Source: Data adapted from AWARE research findings (October 2025) [6].

These results highlight critical flaws in the design of current watermarking systems, leaving them vulnerable to a wide range of attacks, necessitating robust IP copyright protection strategies.

Why Current Technologies Struggle Against Advanced Attacks

The core issue lies in the design and architecture of these systems. Deep learning-based watermarking methods, while seemingly robust during controlled testing, often overfit to the specific attack scenarios they were trained on. This makes them ineffective when confronted with new, unforeseen perturbations. As Kosta Pavlović explained:

DL-based systems tend to overfit to the attack set seen during training and generalize poorly when novel perturbations are introduced [6].

Generative AI tools, such as neural vocoders and voice cloning systems like BigVGAN, present another significant challenge. These technologies can resynthesize audio from scratch, effectively stripping away the subtle modifications that watermarking relies on. Furthermore, traditional architectures that depend on Fully Connected (FC) layers are inherently limited. These systems tie watermark detection to fixed input positions and lengths, making them highly susceptible to disruptions like sample deletion or splicing. In one study, conventional FC-based models experienced a BER of 30.91% under sample deletion attacks, compared to just 3.74% for architectures designed with time-order invariance [6].

The combination of these architectural shortcomings and the rapid evolution of attack methods underscores the urgent need for improved solutions. As Lingfeng Yao and colleagues stated in their AAAI 2026 paper:

The practicality and effectiveness of the proposed overwriting attacks expose security flaws in existing neural audio watermarking systems, underscoring the need to enhance security in future audio watermarking designs [4].

Solutions to Combat Desynchronization Attacks

Watermarking Techniques That Resist Attacks

Researchers have introduced methods to tackle desynchronization attacks effectively. One standout approach is AWARE (Audio Watermarking via Adversarial Resistance to Edits), which uses a Bitwise Readout Head (BRH) to gather temporal evidence into per-bit scores. This makes watermark detection independent of frame order or position. In tests using the VCTK and LibriSpeech datasets at 16 kHz, AWARE achieved a 0.00% BER under low-pass and high-pass filtering and a 1.61% BER after resynthesis through the BigVGAN neural vocoder. In comparison, other methods like AudioSeal and WavMark recorded much higher BERs of 39.01% and 50.00%, respectively [6].

Other techniques also show promise. SyncGuard, with its frame-wise broadcast embedding, eliminates the need for precise watermark localization, addressing a key vulnerability [3]. Meanwhile, the RASE approach, which combines feature point detection with stationary wavelet transform, demonstrates resilience against time-scale modifications and pitch shifts of up to ±50%, all while maintaining high audio quality [2].

AWARE also stands out for its performance under sample deletion attacks, reducing BER from 30.91% to 3.74% by leveraging time-order invariance [6]. As Kosta Pavlović noted:

Robustness largely stems from the detector architecture and the BRH [Bitwise Readout Head]. Conversely, competing systems often see (and thus favor) certain distortions during training, which helps in those particular cases but can leave gaps elsewhere. [6]

These advancements allow for a clearer comparison of how each method performs under different attack scenarios.

Comparing Different Methods

The table below highlights the strengths and limitations of these techniques. AWARE’s time-order-agnostic design excels against temporal cuts and neural vocoder resynthesis, though it slightly trails AudioSeal in PESQ/STOI scores. SyncGuard effectively handles variable-length audio segments without requiring localization, but its implementation involves complex training processes. RASE/SWT stands out for its robustness against extreme time-scale and pitch changes by relying on stable feature points rather than learned models [2][6].

| Method | Mechanism | Strength | Weakness |

|---|---|---|---|

| AWARE | Bitwise Readout Head (BRH) | Handles temporal cuts and neural vocoder attacks [6] | Slightly lower PESQ/STOI scores compared to AudioSeal [6] |

| SyncGuard | Frame-wise broadcast embedding | Manages variable-length audio; no localization needed [3] | Requires complex training with dilated residual/gated blocks [3] |

| RASE/SWT | Feature point detection + SWT | Extremely robust to time-scale and pitch shifts (±50%) [2] | Depends on the stability of detected feature points [2] |

| AudioSeal | Learning-based | High audio quality (PESQ 4.32) [6] | Vulnerable to spectral edits and neural vocoders [6] |

| WavMark | Learning-based | Good audio quality [6] | Fails under coarse quantization and MP3 compression [6] |

How ScoreDetect Protects Against These Attacks

ScoreDetect builds on these advanced watermarking techniques with a dual-layer strategy: robust watermark embedding and AI-driven content matching. This approach directly addresses the vulnerabilities of traditional systems.

Its watermarking process ensures resilience against cropping, compression, and format conversions, making the watermark undetectable while still effective. This means protection remains intact even after significant audio modifications.

On top of that, ScoreDetect includes AI-powered content recognition. This layer identifies protected audio even when synchronization is disrupted, bypassing the need for perfect alignment. By combining these two methods, ScoreDetect offers a comprehensive solution for industries like media, entertainment, education, and research, ensuring audio content stays protected against evolving desynchronization attacks.

Conclusion and Future Directions

Key Takeaways

Desynchronization attacks remain a major challenge for audio watermarking systems. These attacks – whether through simple techniques like temporal cuts or advanced methods like neural vocoder resynthesis – disrupt watermark detection by breaking the alignment between the embedded data and extraction algorithms. Since detecting synchronization markers is almost as complex as extracting the watermark itself, traditional methods often fall short [6].

However, new approaches are changing the game. For instance, AWARE demonstrates a 1.61% bit error rate (BER) after neural vocoder resynthesis, compared to WavMark’s 50.00% [6]. Techniques like feature point detection and frame-wise broadcast embedding reduce reliance on precise localization, addressing long-standing vulnerabilities. Additionally, ScoreDetect’s dual-layer strategy significantly improves resilience to desynchronization attacks. These advancements highlight the importance of continuous innovation to counter evolving threats.

Future Challenges and Research Needs

While progress has been made, critical gaps remain. Many learning-based systems still struggle with overfitting to simulated attack scenarios, leaving them exposed to distortions that fall outside these predefined conditions [6]. The growing prevalence of generative AI and high-fidelity voice cloning introduces new risks, as these technologies can reconstruct audio signals and effectively erase watermarks. As Kosta Pavlović aptly observed:

Audio watermark decoders require architectural mechanisms that are intrinsically robust to common edits and temporal misalignments. If the architecture is not inherently robust, no amount of augmentation or attack-layer engineering will make training reliably effective. [6]

Another pressing challenge lies in addressing montage-like edits, where segments from different audio sources are combined, disrupting global synchronization – a limitation in many current designs [6]. Furthermore, ensuring fair performance across demographics, including different languages, biological sexes, and age groups, is becoming increasingly important [5].

As attackers continue to refine their methods, single-layer defenses may no longer suffice. Solutions like ScoreDetect, which integrate watermarking with AI-powered content recognition tools, will likely play a critical role in building multi-layered protections against these emerging threats. Continued research and development will be essential to stay ahead of these evolving challenges.

FAQs

What impact do desynchronization attacks have on blind audio watermarking systems?

Desynchronization attacks pose a serious threat to blind audio watermarking systems by causing a mismatch between the embedded watermark and the original audio. This misalignment is often the result of actions like time-shifting, cropping, or other alterations, making it difficult for the system to accurately detect and verify the watermark. As a result, the system’s ability to confirm ownership or authenticity is compromised.

To combat these issues, researchers are exploring advanced solutions, including robust feature point detection and synchronization recovery techniques. These approaches are designed to maintain the watermark’s integrity, even when the audio undergoes manipulation, offering stronger safeguards against unauthorized use.

How does neural vocoder resynthesis improve the defense against desynchronization attacks in audio watermarking?

Neural vocoder resynthesis offers a powerful way to defend against desynchronization attacks by producing audio that sounds almost identical to the original, even after edits or distortions. Unlike older methods that rely on watermarks prone to timing shifts or distortions, neural vocoders recreate audio in a way that keeps the watermark intact while maintaining excellent sound quality.

Recent developments highlight how neural vocoders can preserve embedded watermarks even through complex changes like temporal cuts or signal modifications. This durability comes from their ability to create high-quality audio while protecting the watermark’s key attributes, making it much more difficult for attackers to remove or alter the watermark without noticeably degrading the audio.

How do techniques like AWARE and SyncGuard help protect against desynchronization attacks in audio watermarking?

Techniques like AWARE and SyncGuard are pushing the boundaries of protection against desynchronization attacks, making audio watermarking systems tougher and more dependable.

AWARE (Audio Watermarking with Adversarial Resistance to Edits) takes a smart approach by embedding watermarks using advanced optimization in the time–frequency domain. Instead of relying on limited attack simulations, it uses a detection method that combines temporal evidence into a single score for each watermark bit. This method allows it to decode watermarks even after edits like cuts or shifts, maintaining low error rates across a range of distortions.

SyncGuard, on the other hand, zeroes in on stable feature points within the audio signal – points that remain consistent despite temporal edits. By anchoring to these synchronized points, it preserves watermark integrity, even when facing aggressive desynchronization attempts.

Together, these techniques strengthen watermarking systems, ensuring dependable protection for digital audio content in applications where security is paramount.